How To Download Spark Email For Mac 1.2.3

Quick Start

This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark’s interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python.

To follow along with this guide, first download a packaged release of Spark from the Spark website. Since we won’t be using HDFS, you can download a package for any version of Hadoop.

Note that, before Spark 2.0, the main programming interface of Spark was the Resilient Distributed Dataset (RDD). Since Spark 2.0, RDDs have been superseded as the main interface by Dataset, which is strongly typed like an RDD but has richer optimizations under the hood.

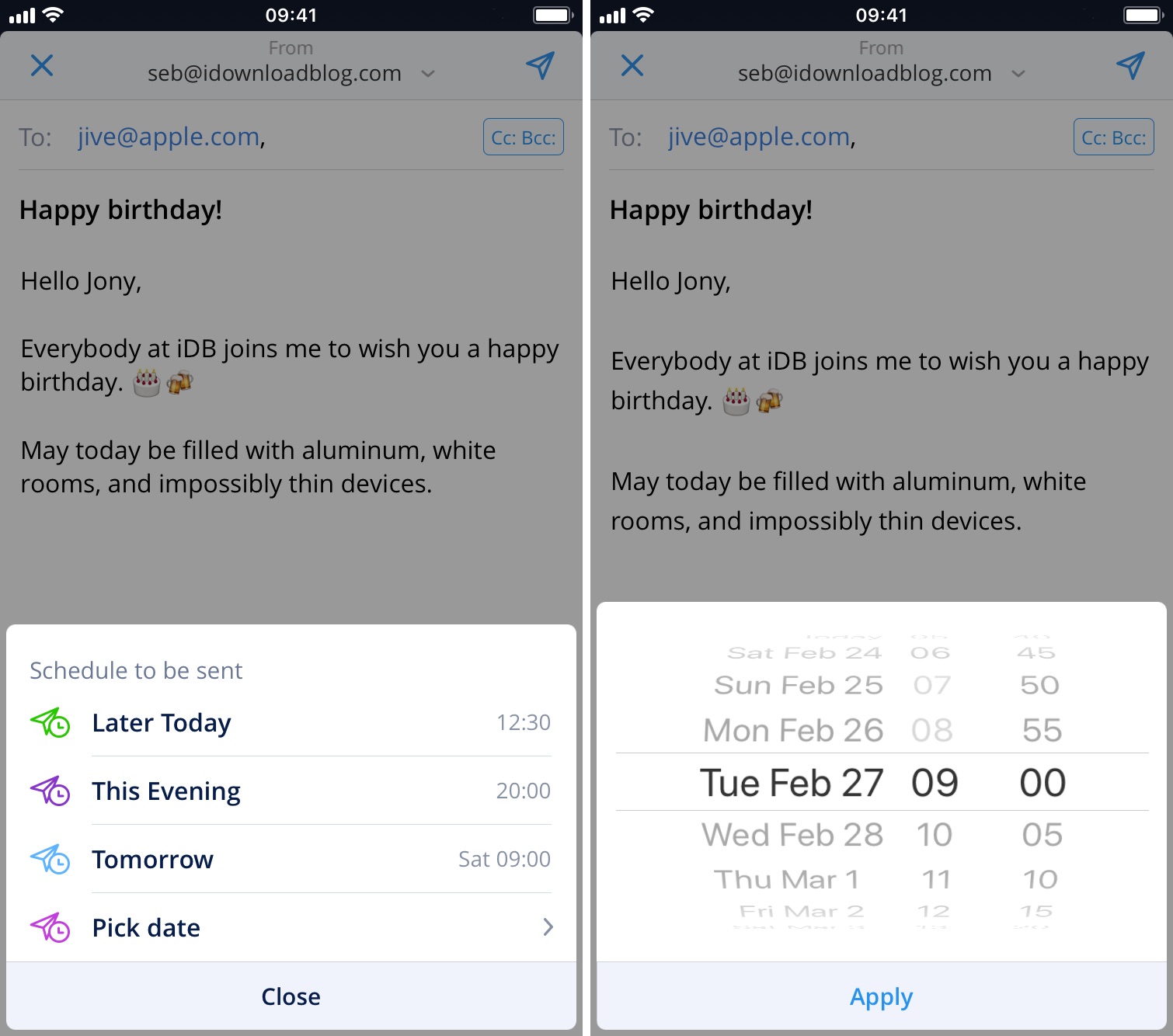



The RDD interface is still supported, and you can get a more detailed reference in the RDD programming guide. However, we highly recommend switching to Dataset, which has better performance than RDD. See the SQL programming guide for more information about Dataset.

Interactive Analysis with the Spark Shell

Basics

Spark’s shell provides a simple way to learn the API, as well as a powerful tool to analyze data interactively. It is available in either Scala (which runs on the Java VM and is thus a good way to use existing Java libraries) or Python. Start it by running the following in the Spark directory:

    ./bin/spark-shell

Spark’s primary abstraction is a distributed collection of items called a Dataset.

Datasets can be created from Hadoop InputFormats (such as HDFS files) or by transforming other Datasets. Let’s make a new Dataset from the text of the README file in the Spark source directory:

    scala> val textFile = spark.read.textFile("README.md")
    textFile: org.apache.spark.sql.Dataset[String] = [value: string]

You can get values from the Dataset directly by calling some actions, or transform the Dataset to get a new one. For more details, please read the API doc.
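The split between actions (which return plain values) and transformations (which return a new Dataset) can be modeled without Spark. The sketch below is a single-machine toy in plain Python, not Spark’s API: the class `LinesDataset` and its `from_lines`, `filter`, `count`, and `first` methods are hypothetical names chosen to mirror the calls used in the shell session. Like Spark, it defers work until an action runs, but it assumes everything fits in one process and does no partitioning or distribution.

```python
from typing import Callable, Iterable, List


class LinesDataset:
    """Toy, single-machine stand-in for a Dataset[String].

    Hypothetical class for illustration only; it is not Spark's API.
    """

    def __init__(self, source: Callable[[], Iterable[str]]):
        # Keep a zero-argument function that yields the lines; nothing is
        # computed until an action calls it.
        self._source = source

    @classmethod
    def from_lines(cls, lines: List[str]) -> "LinesDataset":
        # Each call to the source returns a fresh iterator, so the dataset
        # can be evaluated by more than one action.
        return cls(lambda: iter(lines))

    # Transformation: returns a new dataset and does no work yet.
    def filter(self, pred: Callable[[str], bool]) -> "LinesDataset":
        parent = self._source
        return LinesDataset(lambda: (line for line in parent() if pred(line)))

    # Actions: pull the lines through the pipeline and return a plain value.
    def count(self) -> int:
        return sum(1 for _ in self._source())

    def first(self) -> str:
        # Stops after the first line instead of scanning everything.
        for line in self._source():
            return line
        raise ValueError("first() called on an empty dataset")


text_file = LinesDataset.from_lines(
    ["# Apache Spark", "", "Spark is a unified analytics engine", "Other text"]
)
lines_with_spark = text_file.filter(lambda line: "Spark" in line)  # no work done yet
print(text_file.count())         # prints 4
print(text_file.first())         # prints "# Apache Spark"
print(lines_with_spark.count())  # prints 2
```

Keeping a function that produces the lines, rather than a materialized list, is what lets `filter` return immediately: the predicate only runs when `count` or `first` pulls data through.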

    scala> textFile.count() // Number of items in this Dataset
    res0: Long = 126 // May be different from yours as README.md will change over time, similar to other outputs

    scala> textFile.first() // First item in this Dataset
    res1: String = # Apache Spark

Now let’s transform this Dataset into a new one. We call filter to return a new Dataset with a subset of the items in the file.

    scala> val linesWithSpark = textFile.filter(line => line.contains("Spark"))
    linesWithSpark: org.apache.spark.sql.Dataset[String] = [value: string]

We can chain together transformations and actions:

    scala> textFile.filter(line => line.contains("Spark")).count() // How many lines contain "Spark"?
    res3: Long = 15

In Python, start the shell by running the following in the Spark directory:

    ./bin/pyspark

Or, if PySpark is installed with pip in your current environment:

    pyspark

Spark’s primary abstraction is a distributed collection of items called a Dataset. Datasets can be created from Hadoop InputFormats (such as HDFS files) or by transforming other Datasets.
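To cross-check the figures the Scala session reports for `count()`, `first()`, and the chained `filter(...).count()`, the same three values can be computed for a local file with plain Python. This is a hedged sketch, not part of Spark: `line_stats` and `readme_stats` are hypothetical helper names, and it assumes the file is UTF-8 with ordinary `\n` or `\r\n` line endings (Python’s `str.splitlines()` also breaks on a few rarer separators that Spark’s line reader does not).

```python
from pathlib import Path
from typing import Optional, Tuple


def line_stats(text: str, word: str = "Spark") -> Tuple[int, Optional[str], int]:
    """Return (line count, first line, lines containing `word`) for a block of text.

    Hypothetical helper mirroring textFile.count(), textFile.first(), and
    textFile.filter(line => line.contains(word)).count() on one machine.
    """
    # splitlines() keeps empty lines in the middle of the text but does not
    # add an extra empty line after a trailing newline.
    lines = text.splitlines()
    total = len(lines)
    # Spark's first() fails on an empty Dataset; this sketch returns None instead.
    first = lines[0] if lines else None
    matching = sum(1 for line in lines if word in line)
    return total, first, matching


def readme_stats(path: str = "README.md", word: str = "Spark") -> Tuple[int, Optional[str], int]:
    """Read a UTF-8 text file and compute line_stats on its contents."""
    return line_stats(Path(path).read_text(encoding="utf-8"), word)
```

Run from the Spark directory, `readme_stats("README.md")` should return a line count and a match count that agree with the shell’s `count()` and `filter(...).count()` results for the same README.md, since both count empty lines and match on a plain substring.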